Correct alpha and gamma for image processing


This is a note on the correct usage of alpha blending, pre-multiplied alpha, gamma correction, and linear colors for image processing.


Chris Lomont, Aug 2023

TL;DR;

- Operations that blend or interpolate pixels should be done in linear space, not gamma compressed space. This includes resizing, rotation, and alpha blending.

- sRGB color space transfer functions

sRGB to linear RGB (decoding, decompression)

$$C_{linear}= \begin{cases} \frac{C_{srgb}}{12.92} & C_{srgb}\leq 0.04045 \\ \left(\frac{C_{srgb}+0.055}{1.055}\right)^{2.4} & C_{srgb} \gt 0.04045 \end{cases}$$

Linear RGB to sRGB (encoding, compression)

$$C_{sRGB}= \begin{cases} 12.92C_{linear} & C_{linear}\leq 0.0031308 \\ 1.055C_{linear}^{1/2.4}-0.055 & C_{linear} \gt 0.0031308 \end{cases}$$

- To blend RGBA image $F$ over image $G$, where $C_f$ and $\alpha_f$ are the color and alpha channels in $F$ (similarly for $G$), the new color and alpha are:

- pre-multiplied (also called associated) alpha:

$\alpha_{new}=\alpha_f+\alpha_g(1-\alpha_f)$

$\bar{C}_{new} = \bar{C}_f + \bar{C}_g (1-\alpha_f)$

where $\bar{C} = C\alpha$ is the pre-multiplied alpha color.

- straight (also called non-pre-multiplied or unassociated) alpha:

$\alpha_{new}=\alpha_f+\alpha_g(1-\alpha_f)$

$C_{new} = \frac{C_f \alpha_f + C_g \alpha_g(1-\alpha_f)}{\alpha_{new}}$

- To blend or interpolate colors, use the pre-multiplied color components to get correct blending (equivalently, multiply by alpha, do blend, then divide back out).

- Merging, blending, etc., across different color spaces is a huge topic; the rough idea is to do them in the same color space.

- Alpha should be stored linear! Most image formats, even when RGB channels are gamma compressed, still store alpha as linear.

Intro

This is a quick note gathering some thoughts about image processing that I find would be useful to have in one place. The following is useful for image or video processing as well as CG rendering. The word “image” denotes all uses in the following.

Gamma correction

Images capture light signals for human vision. Physical light can be measured as photons per unit time per unit area (or possibly per unit solid angle, i.e., per steradian). Doubling the number of photons doubles the light energy, but human vision is not linear: it is more sensitive to changes near the dark end than near the light end (an example of Weber’s Law). As such, when storing light signals in image formats using a limited number of bits, a non-linear storage allocating more bits for dark colors allows better image reproduction. For simplicity, and because it somewhat mimics human vision, this non-linear encoding is done via a power law $V_{out}=kV_{in}^\gamma$.

This non-linearity is often called gamma, after the common math use of the Greek letter gamma ($\gamma$) in the power law expression above. Note this is not quite accurate to human vision, and later encodings use slightly better models, but still go under the term “gamma correction”.

Raising to a power $\gamma<1$ is sometimes called an encoding gamma or gamma compression, and using a power $\gamma > 1$ is called decoding gamma or gamma expansion.

Note that gamma ideas are used in many places in the imaging pipeline. Gamma curves for capture systems such as cameras are called opto-electronic transfer functions (OETF) and convert scene light into a video signal. Gamma curves are also used for optimizing storage and transmission formats. Gamma response curves for display technology, called electro-optical transfer functions (EOTF), convert the picture or video signal into linear light output from a display. The opto-optical transfer function (OOTF) is the combination of all of these: it takes (linear) scene light as input and outputs (linear) displayed light. The OOTF is usually non-linear, since the camera and output devices often have different transfer functions, but the goal is to make linear light input become linear light output.

How light is encoded (as well as color primaries) is often detailed in a color space definition. A formal and precise definition is far too much for relaying here; we’ll just stick to the most common color space used for image formats, sRGB.

The sRGB color space was designed for 1990s-2000s era systems; it defines precisely what red, green, and blue mean, how bright the brightest white is, a specific white-point, and the gamma transfer functions used for interpreting bits in an image. Images are usually stored with each color channel RGB as a byte each, i.e., values are integers in 0 to 255 per channel. For the rest of this document, these are scaled to floating point values in 0-1 via cFloat = cByte/255.0. To store as bytes on output, colors are often (somewhat incorrectly) converted with cByte = cFloat*255.0, which has a slight bias away from endpoints. A nicer method is cByte = clamp(floor(cFloat*256),0,255) .
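A minimal Python sketch of the byte and float conversions just described. The function names are illustrative only, and the truncating variant assumes that cFloat*255.0 is cut down to an integer by truncation, which is one common way the bias shows up.

```python
import math

def byte_to_float(c_byte: int) -> float:
    """Scale a byte in 0..255 to a float in 0..1."""
    return c_byte / 255.0

def float_to_byte(c_float: float) -> int:
    """The 'nicer' method: 256 equal-width bins, clamped to 0..255."""
    return max(0, min(255, math.floor(c_float * 256)))

def float_to_byte_truncating(c_float: float) -> int:
    """Assumed naive variant: scale by 255 and truncate toward zero."""
    return int(c_float * 255.0)

# Every byte survives a round trip through float with the binned method.
assert all(float_to_byte(byte_to_float(b)) == b for b in range(256))

# With truncation only exactly 1.0 reaches 255; values just below it do not.
print(float_to_byte_truncating(0.999), float_to_byte(0.999))  # 254 255
```

With the binned method each output byte covers an input interval of the same width, so the two endpoint values are no longer underrepresented.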

For image processing where light is combined, for most uses those operations should be done in linear space, which requires changing from the gamma encoded sRGB values into linear light via

sRGB to linear RGB (decoding, decompression)

$$C_{linear}= \begin{cases} \frac{C_{srgb}}{12.92} & C_{srgb}\leq 0.04045 \\ \left(\frac{C_{srgb}+0.055}{1.055}\right)^{2.4} & C_{srgb} \gt 0.04045 \end{cases}$$

Linear RGB to sRGB (encoding, compression)

$$C_{sRGB}= \begin{cases} 12.92C_{linear} & C_{linear}\leq 0.0031308 \\ 1.055C_{linear}^{1/2.4}-0.055 & C_{linear} \gt 0.0031308 \end{cases}$$

where $C$ represents each color channel RGB. The reason these (and many other) transfer functions have a linear segment near zero followed by a gamma (power law) segment is to prevent infinite slope at the origin for some calculations, and also to model the eye a little better.
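The two transfer functions translate directly into code. The following is a small Python sketch operating on one channel value in 0-1; the function names are my own choice and not from any particular library.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB channel value in 0..1 to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c: float) -> float:
    """Encode one linear channel value in 0..1 to sRGB."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055

# Linear byte levels 32, 127, 222 and their sRGB-encoded byte values.
for level in (32, 127, 222):
    print(level, round(255 * linear_to_srgb(level / 255)))
# 32 99
# 127 187
# 222 240
```

Each channel is converted independently; alpha is not passed through these functions.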

In photography, a stop is a doubling of linear light. An 8-bit linear image would have 8 stops of light from the darkest to the brightest light. A nice DSLR with a 14 bit sensor has (approximately, sensors are mostly linear…) 14 stops. The human eye has around 20-21 stops. The sRGB gamma curve allows encoding around 11 stops (11 bits) of linear light efficiently (meaning it looks good to human eyes!) in 8 bits of storage.
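One rough way to see where numbers like these come from is to count the doublings between the darkest nonzero code and the brightest one. This is an assumption of the sketch below, not necessarily how the figures above were derived, and `stops_between` is a hypothetical helper name.

```python
import math

def stops_between(darkest: float, brightest: float) -> float:
    """Number of doublings (stops) from darkest to brightest linear value."""
    return math.log2(brightest / darkest)

# 8-bit linear storage: the darkest nonzero level is 1/255 of full scale.
linear_8bit = stops_between(1 / 255, 1.0)

# 8-bit sRGB storage: code 1 decodes on the linear segment, (1/255)/12.92.
srgb_8bit = stops_between((1 / 255) / 12.92, 1.0)

print(round(linear_8bit, 1), round(srgb_8bit, 1))  # 8.0 11.7
```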

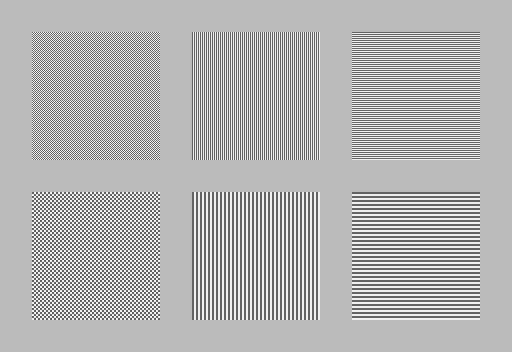

Here is one way to test gamma correctness. This uses color 32 (out of 0-255) as the dark color, 127 as the middle color, and 222 as the light color (the same distance above the middle as 32 is below it). Some places use colors 0 and 255, but then the middle should be (0+255)/2 = 127.5, which is not an integer. I chose colors not at the endpoints, since those map to themselves under sRGB gamma correction, while 32 and 222 do not.

The image has a background of the middle color (linear 127, which is 187 in sRGB). The upper square is a 1x1 checkerboard of dark (linear 32, sRGB 99) and light (linear 222, sRGB 240), with a 2x2 checkerboard under it; the second column has vertical lines of width 1 and 2, followed by horizontal lines of height 1 and 2. All parts should look the same level of gray under correct viewing.
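The reason the checkerboard should match the background can be checked numerically. The sketch below (helper names are illustrative) averages the two sRGB bytes 99 and 240 in linear light, as the eye does when it blurs the fine pattern, and compares that with averaging the encoded bytes directly.

```python
def srgb_to_linear(c: float) -> float:
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c: float) -> float:
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def average_srgb_bytes_linear(a: int, b: int) -> int:
    """Average two sRGB bytes in linear light, return the sRGB byte."""
    mean = (srgb_to_linear(a / 255) + srgb_to_linear(b / 255)) / 2
    return round(255 * linear_to_srgb(mean))

correct = average_srgb_bytes_linear(99, 240)  # matches the 187 background
naive = (99 + 240) / 2                        # averaging encoded bytes

print(correct, naive)  # 187 169.5
```

A resize or blur that averages the encoded bytes would turn the checkerboard into a gray near 170, visibly darker than the 187 background.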

Things that can go wrong: you’re not viewing 1 pixel per 1 pixel of screen (100%), your viewing software is not correct, your monitor is not correct (dim power saving screens will make a mess).

Two nice reads on gamma with excellent illustrative images are at [5] and [6].

Alpha blending

Besides the common three color RGB channels, a fourth channel denoting transparency or blending amount can be present (usually called alpha, with the result written as an RGBA image). Alpha usually represents some form of transparency or information about “partial” pixel coverage.

The name “alpha” comes from the common math use of the Greek letter alpha ($\alpha$) to linearly blend between two things $P$ and $Q$ with $(1-\alpha) P + \alpha Q$ , where as $\alpha$ goes from $0$ to $1$, the blend goes from $P$ to $Q$.

Alpha is mostly used to blend one image $F$ over another image $G$. Note one image ($F$) is considered on top, or foreground, and the other ($G$) is behind, or bottom, or the background. This can be done for many images, i.e., images $F$ over $G$ over $H$. For general use, this should be associative, that is, the grouping of the compositions does not matter: $(F \text{ over } G)\text{ over } H = F\text{ over }(G\text{ over }H)$. (The top-to-bottom order of the layers still matters.) This allows compositing several foreground items once and reusing the result, or compositing something over the background and reusing that result in further work, or any combination of these for many items.

The term “over” comes from the famous Porter-Duff paper [3] describing 12 such operations for the possible $F$ and $G$ composition methods, of which “over” is the most commonly used.

The first derivation of how to compute the “over” operation is called the straight alpha (also called non-pre-multiplied alpha or unassociated alpha) case for reasons given below.

Suppose $\alpha$ denotes the ratio 0-1 of how much of a pixel is covered by some item of interest, such as the edge of a circle. Alpha is also used to mean some form of transparency, such as colored glass or fog. Since these uses result in the same math [4], we can use alpha for both. Note that a careful representation of alpha really requires one for each channel, so a physically accurate “transparency” for RGB would need three different alpha, one for each channel. To save space, and since it’s nicely useful, we’ll follow common convention and only have one alpha channel.

By convention, alpha of 1 means the pixel is fully covered (i.e., the normal use of a pixel color) and 0 means fully transparent.

Suppose we have a pixel from an image $F$ with RGB colors in 0-1 and alpha in 0-1, use $C_f$ for each color channel in $F$, $\alpha_f$ for the alpha channel of $F$, and similarly for the corresponding pixel from image $G$. With no further info on how the partial items overlap, we assume the overlap image for drawing $F$ over $G$ is this:

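![A pixel square divided into four regions: both F and G with area $\alpha_f\alpha_g$, only F with area $\alpha_f(1-\alpha_g)$, only G with area $(1-\alpha_f)\alpha_g$, and neither with area $(1-\alpha_f)(1-\alpha_g)$]()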

This assumption of how the situation looks, when we have no further information, is very well supported mathematically (covered later). However, if we do know more about the overlap, such as we’re rendering a known edge of a circle over a known line, we could compute a more accurate formula for that specific pixel, and this is done in many cases for really high quality renders. We assume all we have here is the 4 channel RGBA images.

From the image above, the new pixel coverage has area $\alpha_{new}=\alpha_f+(1-\alpha_f)\alpha_g$ by simple geometry, so we use this as the new alpha.

The color is a bit more tricky. From the geometry, the naïve color looks like it should be $C_f\alpha_f + C_g\alpha_g(1-\alpha_f)$. But this is a little wrong: consider the example of a red pixel (1,0,0,1/2) over another red pixel (1,0,0,1/2). The result should be the same full red, but only covering $1/2 + (1 - 1/2)*(1/2)=3/4$ of the pixel. So we want the answer to be (1,0,0,3/4). But the naïve color formula gives the red as 3/4.

The answer is we need to scale the final color by the area covered, i.e., divide the guessed color by the new alpha (this can also be derived carefully from physically based light arguments), to get the correct equations for the “over” operation:

straight (also called non-pre-multiplied or unassociated) alpha (f on top, g on bottom):

$\alpha_{new}=\alpha_f+\alpha_g(1-\alpha_f)$

$C_{new} = \frac{C_f \alpha_f + C_g \alpha_g(1-\alpha_f)}{\alpha_{new}}$

Note the number of operations needed to compute this per pixel: several multiplies and a divide, plus checks to avoid division by 0 or numerical overflow. If many images are composited, or if the same image is used many times, this is more operations than we really need.
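As a sketch of the straight alpha equations in Python, with pixels as (r, g, b, a) tuples of floats in 0-1. The function name and the choice to return transparent black when the result has zero coverage are assumptions of this example.

```python
def over_straight(f, g):
    """Composite straight-alpha pixel f over straight-alpha pixel g."""
    *cf, af = f
    *cg, ag = g
    a_new = af + ag * (1.0 - af)
    if a_new == 0.0:
        # Nothing is covered, so the color is undefined; pick black.
        return (0.0, 0.0, 0.0, 0.0)
    color = tuple((x * af + y * ag * (1.0 - af)) / a_new
                  for x, y in zip(cf, cg))
    return color + (a_new,)

half_red = (1.0, 0.0, 0.0, 0.5)
print(over_straight(half_red, half_red))  # (1.0, 0.0, 0.0, 0.75)
```

The half-red example from the text comes out as full red at 3/4 coverage, as required.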

A better way, called pre-multiplied alpha (also called associated alpha, since it has “associated” the alpha to the color channels), replaces each color channel $C$ with one multiplied by the alpha, $\bar{C}=C\alpha$. This simplifies and unifies the expressions, which now take fewer compute steps per pixel in a possibly long set of image processing steps:

pre-multiplied (also called associated) alpha (f on top, g on bottom)

$\alpha_{new}=\alpha_f+\alpha_g(1-\alpha_f)$

$\bar{C}_{new} = \bar{C}_f + \bar{C}_g (1-\alpha_f)$
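A matching Python sketch for the pre-multiplied form, again with (r, g, b, a) float tuples. The helper names are illustrative, and `unpremultiply` returning transparent black at zero alpha is an assumption of this example.

```python
def premultiply(pixel):
    """Straight RGBA -> pre-multiplied RGBA."""
    r, g, b, a = pixel
    return (r * a, g * a, b * a, a)

def unpremultiply(pixel):
    """Pre-multiplied RGBA -> straight RGBA (black when alpha is 0)."""
    r, g, b, a = pixel
    if a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)
    return (r / a, g / a, b / a, a)

def over_premultiplied(f, g):
    """Composite pre-multiplied f over pre-multiplied g.

    All four components use the same expression, with no division.
    """
    keep = 1.0 - f[3]
    return tuple(cf + cg * keep for cf, cg in zip(f, g))

half_red = premultiply((1.0, 0.0, 0.0, 0.5))
result = over_premultiplied(half_red, half_red)
print(result)                 # (0.75, 0.0, 0.0, 0.75)
print(unpremultiply(result))  # (1.0, 0.0, 0.0, 0.75)
```

The division only happens once, at the very end, if a straight alpha result is needed at all.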

Note this should not be called “associative alpha,” which appears incorrectly in some places. Perhaps it gets confused since people often (incorrectly) claim that using pre-multiplied alpha is required to make composition associative, but this is not true: both pre-multiplied and straight alpha make the operations associative if done correctly. Pre-multiplied alpha is just less work in most cases.
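The associativity claim can be checked numerically. The sketch below composites three arbitrary test pixels (values chosen only for illustration) with both groupings, in both representations, and compares the results up to floating point tolerance.

```python
def over_straight(f, g):
    *cf, af = f
    *cg, ag = g
    a = af + ag * (1 - af)
    if a == 0:
        return (0.0, 0.0, 0.0, 0.0)
    return tuple((x * af + y * ag * (1 - af)) / a
                 for x, y in zip(cf, cg)) + (a,)

def over_premultiplied(f, g):
    keep = 1 - f[3]
    return tuple(x + y * keep for x, y in zip(f, g))

def premultiply(p):
    *c, a = p
    return tuple(x * a for x in c) + (a,)

def close(p, q, tol=1e-12):
    return all(abs(x - y) <= tol for x, y in zip(p, q))

F = (0.9, 0.2, 0.1, 0.3)
G = (0.1, 0.8, 0.3, 0.6)
H = (0.2, 0.3, 0.9, 0.5)

straight_left = over_straight(over_straight(F, G), H)
straight_right = over_straight(F, over_straight(G, H))

pF, pG, pH = premultiply(F), premultiply(G), premultiply(H)
premult_left = over_premultiplied(over_premultiplied(pF, pG), pH)
premult_right = over_premultiplied(pF, over_premultiplied(pG, pH))

print(close(straight_left, straight_right))            # True
print(close(premult_left, premult_right))              # True
print(close(premultiply(straight_left), premult_left)) # True
```

The last line confirms that the two representations describe the same composite, so the choice between them is about cost and convenience, not correctness.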

Pros of using pre-multiplied alpha:

- all four RGBA components are the same equation, allowing treating as a 4-vector

- composition associativity is cleaner

- takes fewer operations for repeated work

- makes algorithms for blending, resizing, and other filtering much simpler to write

- allows making “emissive” colors such as (1,0,0,0) which adds red to a scene when used in the pre-multiplied color method. Note this type of feature support means it is possible to have color channels with values larger than the alpha channel even though the colors are called pre-multiplied. This type of effect requires special handling when using straight alpha.

- helps avoid purple or black edges appearing in operations from incorrect math.

Cons of using pre-multiplied alpha:

- sometimes a user wants the alpha channel to mean something else, and would not like that data to change the RGB channels

- storing pre-multiplied colors as bytes removes a lot of possible color information, especially at low alpha, leading to slightly worse results.

Final points:

- Now, here is a very important part: when doing image operations that create new pixels, such as resizing, rotation, or blending as above, using pre-multiplied alpha makes things much easier. And no matter what, you must use the equivalent form to get correct new pixels.

- Alpha channels are almost always stored in linear, even when colors are gamma encoded.
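To illustrate the point about creating new pixels, here is a small Python sketch that interpolates halfway between an opaque red pixel and a fully transparent pixel whose (invisible) color happens to be green. The pixel values and helper names are chosen only for this example.

```python
def lerp(p, q, t):
    """Component-wise linear interpolation between two 4-tuples."""
    return tuple((1 - t) * x + t * y for x, y in zip(p, q))

def premultiply(p):
    r, g, b, a = p
    return (r * a, g * a, b * a, a)

def unpremultiply(p):
    r, g, b, a = p
    if a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)
    return (r / a, g / a, b / a, a)

opaque_red = (1.0, 0.0, 0.0, 1.0)
clear_green = (0.0, 1.0, 0.0, 0.0)  # fully transparent, color is invisible

# Interpolating straight-alpha values lets the invisible green leak in.
wrong = lerp(opaque_red, clear_green, 0.5)

# Interpolating pre-multiplied values weights each color by its coverage.
right = unpremultiply(
    lerp(premultiply(opaque_red), premultiply(clear_green), 0.5))

print(wrong)  # (0.5, 0.5, 0.0, 0.5)
print(right)  # (1.0, 0.0, 0.0, 0.5)
```

The straight interpolation produces a half-covered yellowish pixel, which is the kind of colored fringe seen around resized sprites; the pre-multiplied version gives pure red at half coverage.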

Marc Levoy has a nice history of these concepts on his website. The summary is:

- 1977: Dr. Alvy Ray Smith and Dr. Ed Catmull (later founders of Lucasfilm’s computer graphics group and Pixar), while working at the New York Institute of Technology (NYIT), coined the term alpha for blending between images, from the common math symbol for linear blending [1]

- 1980: Bruce Wallace and Marc Levoy, at Hanna-Barbera Productions, showed how to blend two partially opaque images into a new partially opaque image (1981 SIGGRAPH paper) [2]. These are the non-pre-multiplied alpha equations.

- 1984: Tom Porter and Tom Duff, at Lucasfilm, showed that pre-multiplied alpha makes the equations and the compute work simpler [3]

See the references for a lot of examples of what happens when alpha is handled incorrectly.

Finally, here’s the idea of a proof for showing the blending arguments laid out above are reasonable:

Doing careful math (integrating over all items…) you can check the coverage arguments above are reasonable. A simple way to see this mentally is to consider the pixel divided into many equal-sized squares, with the colors $C_f$ and $C_g$ spread randomly, uniformly, and independently of each other over these squares. Then any square has probability $\alpha_f$ to have color $C_f$ on top, and similarly, probability $\alpha_g$ to have color $C_g$ on the bottom. Thus the probability of having both is $\alpha_f\alpha_g$, of having $C_f$ and not $C_g$ is $\alpha_f(1-\alpha_g)$, etc., which is exactly the image used in the derivation.
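The random-squares picture is easy to simulate. The following Python sketch (single color channel, fixed seed, all names and sample values hypothetical) scatters the two layers independently over many sub-squares and compares the measured coverage and average visible color with the straight alpha formulas.

```python
import random

def simulate_over(cf, af, cg, ag, n=200_000, seed=1):
    """Monte Carlo estimate of (color, alpha) for F over G, one channel."""
    rng = random.Random(seed)
    covered = 0
    color_sum = 0.0
    for _ in range(n):
        has_f = rng.random() < af  # sub-square covered by the top layer
        has_g = rng.random() < ag  # sub-square covered by the bottom layer
        if has_f:
            covered += 1
            color_sum += cf        # top layer hides whatever is below
        elif has_g:
            covered += 1
            color_sum += cg
    alpha = covered / n
    color = color_sum / covered if covered else 0.0
    return color, alpha

cf, af, cg, ag = 1.0, 0.4, 0.2, 0.5
sim_color, sim_alpha = simulate_over(cf, af, cg, ag)

alpha_new = af + ag * (1 - af)                          # 0.7
color_new = (cf * af + cg * ag * (1 - af)) / alpha_new  # about 0.657

print(abs(sim_alpha - alpha_new) < 0.01, abs(sim_color - color_new) < 0.01)
```

Averaging the color only over the covered sub-squares is what corresponds to the division by $\alpha_{new}$ in the straight alpha equation.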

Practical matters

Be careful when reading, writing, and interpreting image formats. Some pointers:

- PNG images store

  - RGB data in sRGB (by default; they can use other color spaces too)

  - an alpha channel that, according to the PNG spec, should be linear.

- JPEG images

  - should be treated as sRGB unless there is a different color profile embedded

  - do not have an alpha channel (except by nonstandard extensions)

- GIF does not define a color space; people often treat GIF images as sRGB.

- Be careful multiplying RGB bytes from an image by the alpha byte to pre-multiply. For best accuracy this should be done by converting from sRGB (or your image color space) to linear, pre-multiplying, then converting back to your color space and storing. Careful rounding keeps the highest quality.
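A sketch of that last pointer in Python, assuming sRGB color bytes and a linear alpha byte. The function name `premultiply_srgb_byte` is hypothetical, and the byte conversion uses the floor(x*256) method from earlier in this note; other rounding choices are possible.

```python
import math

def srgb_to_linear(c: float) -> float:
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c: float) -> float:
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def float_to_byte(c: float) -> int:
    return max(0, min(255, math.floor(c * 256)))

def premultiply_srgb_byte(c_byte: int, a_byte: int) -> int:
    """Pre-multiply one sRGB color byte by a linear alpha byte."""
    alpha = a_byte / 255.0                    # alpha is already linear
    linear = srgb_to_linear(c_byte / 255.0)   # decode color to linear light
    encoded = linear_to_srgb(linear * alpha)  # multiply, then re-encode
    return float_to_byte(encoded)

naive = 255 * 128 // 255                    # multiplying encoded bytes: 128
careful = premultiply_srgb_byte(255, 128)   # noticeably brighter than 128
print(naive, careful)
```

Multiplying the encoded bytes directly gives a much darker stored value than the linear-light route, which is one source of the dark edges mentioned above.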

References

[1] Smith, A.R., Painting Tutorial Notes, SIGGRAPH ‘79 course on Computer Animation Techniques, 1979, ACM.

[2] Wallace, B.A., [Merging and Transformation of Raster Images for Cartoon Animation](papers\Wallace - 1981 - Merging and Transformation of Raster Images for Cartoon Animation.pdf), Proc. ACM SIGGRAPH ‘81, Vol 15, No. 3, 1981, ACM, pp. 253-262.

[3] Porter, T., Duff, T., [Compositing digital images](papers/Porter, Duff - 1984 - Compositing Digital Images.pdf), Proc. ACM SIGGRAPH ‘84, Vol. 18, No. 3, 1984, ACM, pp. 253-259.

[4] Glassner, Andrew, [Interpreting Alpha](papers/Glassner- 2015 - Interpreting Alpha.pdf), Journal of Computer Graphics Techniques Vol. 4, No. 2, 2015 .

[5] https://blog.johnnovak.net/2016/09/21/what-every-coder-should-know-about-gamma/

[6] http://www.ericbrasseur.org/gamma.html?i=1

https://en.wikipedia.org/wiki/Alpha_compositing

https://en.wikipedia.org/wiki/SRGB

https://en.wikipedia.org/wiki/Gamma_correction

https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

https://ciechanow.ski/alpha-compositing/

https://graphics.stanford.edu/papers/merging-sig81/

https://www.realtimerendering.com/blog/gpus-prefer-premultiplication/

https://hacksoflife.blogspot.com/2022/06/srgb-pre-multiplied-alpha-and.html

https://courses.cs.washington.edu/courses/cse576/03sp/readings/blinnCGA94.pdf

https://developer.nvidia.com/content/alpha-blending-pre-or-not-pre

https://stackoverflow.com/questions/32889512/explain-how-premultiplied-alpha-works

https://iquilezles.org/articles/premultipliedalpha/

https://news.ycombinator.com/item?id=33301471

https://tomforsyth1000.github.io/blog.wiki.html (search for 2 premultiplied alpha articles)
